Projects

Accelerated data science is the use of specialized hardware and software tools to speed up the development of data-driven insights and solutions. It relies on high-performance computing resources such as graphics processing units (GPUs) and field-programmable gate arrays (FPGAs) to accelerate data processing and machine learning algorithms. It can also involve automated tools and workflows that streamline the data science pipeline, so data scientists can iterate quickly and experiment with different models and techniques.

Analyzing the past 10 years of PG&E electricity usage data in California and forecasting future usage with time series methods can provide valuable insights for energy companies, policymakers, and consumers.
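As a rough illustration of what a time series baseline can look like, here is a minimal sketch of a seasonal naive forecast, which predicts each month as the value seen in the same month one year earlier. This is not necessarily the method used in the project; the function names `seasonal_naive_forecast` and `mean_absolute_error` are hypothetical, and the usage numbers are made up for the example, not real PG&E data.

```python
from statistics import mean


def seasonal_naive_forecast(history, season_length, horizon):
    """Forecast each future step as the value observed one season earlier."""
    if len(history) < season_length:
        raise ValueError("need at least one full season of history")
    last_season = history[-season_length:]
    # Repeat the most recent season for as many steps as requested.
    return [last_season[i % season_length] for i in range(horizon)]


def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    return mean(abs(a - p) for a, p in zip(actual, predicted))


# Made-up monthly usage values for two years (illustrative only, NOT PG&E data).
usage = [310, 290, 270, 250, 260, 300, 340, 350, 320, 280, 285, 305,
         315, 295, 272, 255, 262, 306, 348, 355, 326, 284, 288, 310]

# Hold out the second year to check how the baseline would have performed.
train, test = usage[:12], usage[12:]
forecast = seasonal_naive_forecast(train, season_length=12, horizon=12)
mae = mean_absolute_error(test, forecast)
print(f"Seasonal naive MAE: {mae:.2f}")
```

A baseline like this is mainly useful as a yardstick: a more elaborate model is only worth its complexity if it beats the baseline's error on held-out data.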

HDF5 stands for Hierarchical Data Format version 5. It is a file format and data model designed to store and manage large, complex datasets. HDF5 files are used in many scientific and engineering applications, including big data workloads, because they can store and access large amounts of data efficiently.

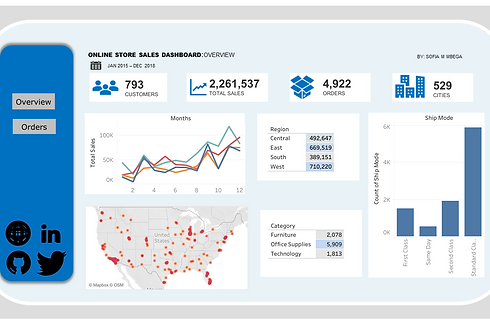

For Business Intelligence tools, I normally work with Tableau. It is a data visualization tool that can connect to a variety of databases, including SQL Server, Oracle, MySQL, and many more. I can connect Tableau to a database and create reports, dashboards, and visualizations based on up-to-date data. This makes it easier to make informed decisions and keep up with changing trends and patterns in the data.
